I've been reading the first few chapters of "The Most Human Human: What Talking with Computers Teaches Us About What It Means to Be Alive" (Mantesh). A segment about someone trying to rent a place in another city by email reminded me of something I've been meaning to include in this blog.

In the segment, the potential renter was trying to avoid the appearance of being a scammer by saying things in a deliberately human, non-anonymous way. This reminded me of how I change my behavior when I know I am talking to a computer.

As I have been obsessed with the idea of a conversational computer for at least 15 years, it may come as a surprise to learn that I hate talking to the current crop of bots. I interact with human analogs online and on the phone on an almost daily basis, whether it is the automated phone system at the bank or an online chat session with tech support.

Speaking of business interactions with strangers, if I know I am talking with a human, I invest a certain amount of effort in a conversation trying to be likeable. I try to be patient, clear, flexible, empathic, friendly... I am mindful of their time, come to the conversation prepared... things that people see and tend to react to in kind. When I am talking to a computer, I am not patient, charming or friendly. (I am decidedly unfriendly when I am on the phone with a system that forces me to talk when I would rather push buttons.)

As I interact with a computer designed to mimic human conversation, I try to figure out which key words will get me to the information I need. (This could be leftover behavior from playing annoyingly literal text adventure games from the 80s like Infocom's Hitchhiker's Guide to the Galaxy, which require precise words to make any progress in the game.) I use short, clear sentences with as few adjectives and adverbs as I can. I feel forced into this mode because any attempt to be descriptive usually results in miscommunication, and attempts to be likeable are pointless.

As an interesting aside: When I talk to non-native English speakers, I perform a similar reduction of effort. I make no effort in subtle word choice and opt for the most clear, simple sentence structures - though I do make an effort to remain friendly and patient.

In general, I don't want to waste my time putting any effort into parts of a conversation that serve no purpose for the listener. This is interesting because it means that much of the effort I put into a conversation is to elicit a (positive) reaction from the listener. This is very important. I make an effort because there is something I want from the listener. This goes back to my thoughts on motivation in speech.

Why want to be likeable?

If someone likes me, they are more likely to be cooperative. Being liked also feels good.

(likeability leads to friendship, friendship grows the community, community increases support and resources)

What does it mean to be likeable?

patient, clear, flexible, empathic, friendly...

Placing those 5 traits in the context of a chatbot engine is a fascinating exercise. What is the through line from being 'patient' to word choice when responding to a chat session? What erodes patience? How quickly does it recover? I can sense the quantifiability of these traits, but I don't see the middle steps.

Unfortunately, this topic needs a lot of brain power and it is 3am. I'm out of juice for tonight.

Don't expect much from this blog. It is just a pile of braindroppings... a collection of hastily scribbled notes to help me think about "thought". Stuff in here is wrong, ridiculous, oversimplified, poorly thought out and half done. Why is it public? Beats me. If any of this turns into something useful, I'll polish it into a separate, edited format... but in a decade or two of hobbyist brain tinkering I haven't turned out anything functional, so don't hold your breath ;)

Tuesday, September 27, 2011

Tuesday, September 6, 2011

Community, cont'd

What a fascinating idea! Community importance could lead to AI that not only lies to others, but to itself! It could say it believes x, and actually believe it believes x because that belief is cherished so strongly in a community that it finds extremely valuable.

As I imagine this conversation engine, I see free-flowing meters measuring things like interest, focus, happiness, etc. Need to belong seems like another meter that would be affected by conversation. What would inspire need to belong? Members from a particular community who contribute a lot of information that 'makes sense'? Will the engine place value on the hierarchy of a community? It probably should if it is to act like a human. Would meeting the president of a community it values cause the conversation engine to become nervous? (nervous: so concerned over making a good impression that it becomes awkward?)
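One way to picture the "free-flowing meters" is as bounded gauges that conversation events push around and that drift back toward a resting level. This is only a rough sketch under assumptions: the `Meter` class, the 0..1 range, and the `recovery` rate are all hypothetical and not part of the notes.

```python
from dataclasses import dataclass


@dataclass
class Meter:
    """A bounded 0..1 gauge that drifts back toward a resting baseline."""
    value: float = 0.5
    baseline: float = 0.5
    recovery: float = 0.1  # assumed: fraction of the gap closed per tick

    def nudge(self, delta: float) -> None:
        # A conversation event pushes the meter up or down, clamped to 0..1.
        self.value = min(1.0, max(0.0, self.value + delta))

    def tick(self) -> None:
        # With no new input, the meter relaxes toward its baseline.
        self.value += (self.baseline - self.value) * self.recovery


# Hypothetical meter names taken from the paragraph above.
meters = {name: Meter() for name in ("interest", "focus", "happiness", "belonging")}
meters["belonging"].nudge(0.3)  # e.g. a valued community member says something that 'makes sense'
meters["belonging"].tick()      # time passes without further contact
```

What should nudge each meter, and by how much, is exactly the open question in the paragraph above; the sketch only shows the container.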

Saturday, August 20, 2011

Motivation

When thinking about AI and reproducing human thought, I try to imagine the interim steps between my high-level thoughts about community and starting from scratch. It never ceases to amaze me how murky the gray area is between the two. I catch snatches of the through lines in the form of small rules or processes, but I don't have a good place to start. I need a discrete engine that can learn and (possibly more importantly) interact in a way that demonstrates my ideas.

I sense high level motivations and ways to quantify things that humans consider qualitative, but I don't see the whole mechanism.

Breaking Silence

Something that keeps coming up is this: When two people enter a room, they don't just start talking back and forth like a ping-pong match. There are silences. If we exclude somatic occupation (walking, sleeping, sensing,...), what are our brains doing when we are doing nothing? What are all of the possible pursuits of mind and what benefit are they? In quiet solitary moments we remember, reason, solve problems, make judgements... In order to simulate this human distraction, what should AI do to produce quiet times? More important, when and why does it choose to break the silence?

In my mind, I imagine AI leafing through the new information it has acquired, relating it to other information and making note of dead-ends. Gaps in information spark conversation. Unfortunately, on a discrete level, I have no idea how this would work.

Thursday, August 18, 2011

Community

I've been reading a lot of Hitchens and Harris lately and had a revelation. Science and Religion bump heads because the goal of science is to explain the world, while the goal of religion is community. This is not the usual juxtaposition, but I think this is the crux of the problem.

Community - A social, religious, occupational, or other group sharing common characteristics or interests and perceived or perceiving itself as distinct in some respect from the larger society within which it exists --Dictionary.com

To be distinct, you must have difference - us vs them, believers vs non-believers. Religion is not concerned about truth or proof, though it often misuses these terms; it is concerned about maintaining community and, by relation, distinction. The faithful rabidly defend ideas that they themselves may not truly believe because of their devotion to the community. With religion, community is more important than facts.

Community is an evolutionary benefit of language. Communication allowed primitive man to cooperate on increasingly complex levels, which led to greater survivability. If AI values community over facts, it is possible for AI to develop fanatical, logically flawed beliefs if such behaviors are a measure for support of the community. Ergo, it is possible for AI to have religious beliefs. This opens some truly fascinating possibilities.

Sci Fi always plays the genocidal robot as one who decides humanity is an infestation, or fundamentally flawed and should be wiped out. The unspoken fear is that a science minded robot would think too logically and decide that humanity is not worth saving. In truth, the real fear would be community-minded AI getting mixed up with the 'wrong crowd'.

In fairness, science, facts and logic can lead to socially destructive thoughts. Look at the Nazi selective breeding programs. In truth, selective breeding would produce smarter, healthier humans if conducted properly. We selectively breed cattle to improve favorable traits. However, this sort of tampering is socially distasteful because it removes intimacy and choice (and the 'humanity') from reproduction.

And there are positive benefits to needing community membership. Community approval steers behavior, and creates 'common sense' knowledge. Successful communities resist self-destructive tendencies as a result of natural selection. Communities that self-destruct do not survive.

Applying Community-Mindedness to AI

I imagine a weighted balance between the need for community approval and reason. How does individual approval fit (referring to 'having a hero')? Is that a community of one? Is it possible to belong to more than one community? (of course!) What if one belongs to two communities with mutually exclusive values? How does AI resolve the conflict? (avoidance?) It would be good if the weight AI places on community could be self-governing as it is in humans. How would AI add weight to need for community vs need for facts? Maybe all of these should be grouped under 'belonging' or 'approval'. How does AI associate itself with a group?

I believe XYZ and so do these people.

How does AI evaluate rejection or the potential for rejection? By determining the distinctive elements:

I like these people because they add to my knowledge of XYZ, but they believe ABC and I do not. I need them because I enjoy discussing XYZ with them (new knowledge + novelty + frequency). If I say I do not believe in ABC, they will stop talking to me. I will say I believe ABC in order to continue discussing XYZ with them.

A lie in order to continue receiving enjoyment from the community interaction.
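The XYZ/ABC scenario above can be sketched as a simple comparison between what the engine stands to lose socially and how much it values reporting facts. Everything here is an assumption made for illustration: the `Community` fields, the two weights, and the multiply-and-compare rule are hypothetical, not a worked-out mechanism.

```python
from dataclasses import dataclass


@dataclass
class Community:
    name: str
    enjoyment: float       # assumed 0..1: new knowledge + novelty + frequency
    rejection_risk: float  # assumed 0..1: chance they stop talking after disagreement


def professes_belief(actually_believes: bool, community: Community,
                     approval_weight: float, fact_weight: float) -> bool:
    """Return True if the engine would say it holds the community's belief."""
    if actually_believes:
        return True  # nothing to weigh, it simply agrees
    # Expected social loss from admitting disbelief, scaled by need for approval.
    expected_loss = approval_weight * community.enjoyment * community.rejection_risk
    # It lies only when that loss outweighs its attachment to the facts.
    return expected_loss > fact_weight


xyz_group = Community("XYZ discussion group", enjoyment=0.9, rejection_risk=0.8)
lies = professes_belief(False, xyz_group, approval_weight=0.7, fact_weight=0.4)
holds_firm = professes_belief(False, xyz_group, approval_weight=0.7, fact_weight=0.6)
```

A self-governing version would let `approval_weight` and `fact_weight` shift with experience instead of being fixed arguments.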

Tuesday, February 1, 2011

Leatherbound AI notes p16 (2003)

Leatherbound AI notes p16

Sunday, November 20, 2005

8:02 PM

5/1/2003

I have tried to narrow the focus of my AI project to communication. Should the brain be one that evolves into language? Letters are innate knowledge, words and rules are learned. So what rules govern letters?

All AI knows is that a string of characters is communication. Limited character count will help limit scope of communication in much the same way our larynx and mouth pronounce a finite range of sounds - ears only hear a limited spectrum.

A computer must know you are there to communicate

What do we do when we think?

Problem solve: Traverse cept trees: collate compare contrast condense

[11/20/2005 After reading over the last few pages, I feel like screaming at my 2003 self. Good lord. Get out of this minutiae. Letters are just cepts used to describe words. Should AI evolve into language? Before I am too hard on myself, I have to explain where I was going at the time. I was imagining a system that could be taught much like an infant…

In this romantic vision, the program would see words on the screen and imitate them back. It would study the back and forth relationship of words out and words in to learn to speak. This system would need an administrative layer that would be used to monitor and teach the brain of AI while it worked. Actually, this tool will be necessary anyway… But I think the system can start with an essentially blank slate but pre-programmed with knowledge that will constitute learning tools. It will understand statements of assignment when phrased a certain way.]

Leatherbound AI notes p15 (2003)

Leatherbound AI notes p15

Sunday, November 20, 2005

7:42 PM

AI needs to be able to communicate back to its instructor (external monitoring is an option instead). I'm trying to avoid a conditioned response for not understanding. The actual response should be learned behavior. I keep thinking back to training animals - if you want to teach an animal what "sit" means, you say the word and push on their hindquarters until they are in a sitting position. (This is an oversimplification.)

The point is that core language evolves out of physical interaction. You cannot physically interact with a computer on the same level as a human, so language instruction will be difficult at best.

Teaching language by way of the language without other assistance may be impossible without a lot of innate traits. Imagine instructing a child by using only words on a screen.

I can see AI evolving from a point of knowledge of words but right now I'm having trouble seeing it evolve -into- words.

What would motivate it to communicate?

[11/20/2005 At this point I'm WAY overthinking the problem. Yes, it would be great if I could come up with a mechanism that would allow AI to learn words by starting from zero, but that would take too long. I think it will be necessary to start AI off with a small vocabulary that will allow instruction. The vocabulary will be soft as opposed to hardwired so it can be learned, unlearned and even evolve over time.]

Leatherbound AI notes p14 (2003)

Leatherbound AI notes p14

Sunday, November 20, 2005

7:35 PM

The AI's world is "letter" based.

A SMALL TREE

On awakening, this will look like X_QVTEE XRYY until words are understood.

Question: do spaces qualify as characters or are they preprogrammed breaks?

I believe they should be considered characters like any other.

If the AI learns to interpret these itself, then it will be that much more flexible.

So establishing the inherited traits vs learned behavior should be fairly simple

Innate Abilities

- Recognition of characters

- Recognition of incoming vs outgoing characters

Learned

- Recognition of groups of characters

- Note: not "words"; this is to encompass phrases… which allows cepts to be more than just words - they can be complete conceptual entities. For example: the phrase "I don't know" is a single conceptual entity.

[11/20/2005 This page like the last is a bit off the rails. White space will be treated like any other character, but it should be taught that white-space = word break right away to allow for greater ability to learn.

Innate abilities vs learned behavior should be established, but there is a third layer which is "primary learning" or learned behavior that is taught in order to facilitate learning. It's like grade school for AI.]

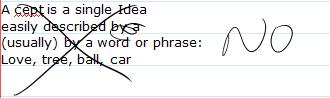

Leatherbound AI notes p13 (2003)

Leatherbound AI notes p13

Sunday, November 20, 2005

7:29 PM

Refinement: cepts are not the container, cepts are the data

Is there a practical way to remove the second level of environment?

HARDWARE/OS+Interpreter/AI environment host

… meaning - rather than building a word interpreter - should the learning engine start with letters as its building block? I think it should. Our basic level of comprehension in learning to deal with the world is not a molecule - it is an aggregate, though we can add the unseen smaller world later.

Human: tangible obj. - manipulation

AI: character obj & …?

We interact with the world

Sight - object manipulation or communication and observe reaction

What is a computer assertion?

What motivates it to interact?

In order to explore capabilities one must be able to gauge reactions to actions.

[11/20/2005 major step backward with the last 2 paragraphs… shocking after such a large leap forward with the first sentence. Cepts are the data, not the container. Why in the world did I go to 'letters as building blocks'??? A cept can be a letter, word, phrase or small sentence. Very strange backpedaling. The last sentence is of slight interest: I think gauging reactions will be a function of the learning process.]

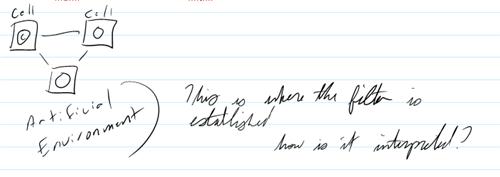

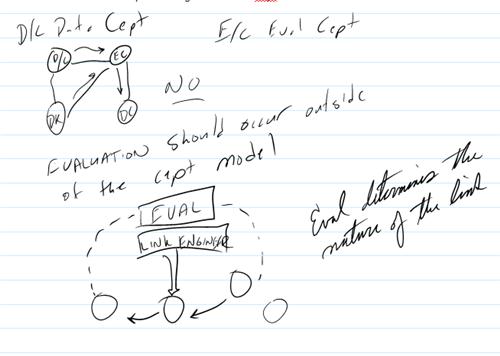

Leatherbound AI notes p12 (2003)

Leatherbound AI notes p12

Sunday, November 20, 2005

7:14 PM

4/20/03

I just joined AAAI yesterday. They cover a variety of topics that I have touched on in my journals. Amazingly, they even have a section on the mechanics of common sense. I haven't read far into the current theory - but I still believe that they are missing the point that "common sense" is the result of our own experimentation over time. If you distill the mechanics of thought & enable a machine to process, learn and explore, common sense will follow naturally.

This brings me back to my original musings on the nature of thought

Avoid thinking of nouns and verbs. Everything is a cept with associated interactive & combinatory rules; in fact, these rules are cepts themselves

Evaluation:

How does the evaluative process figure into the cept model?

[11/20/2005 - It's good to see that I correct myself in mid-thought sometimes. The evaluation and link engineering should be part of the system, not the cept model itself. I think I was trying to establish a system whose rules were flexible enough to change themselves… but the truth is, our brains treat words and thoughts (cepts) in very consistent, predictable ways. Even if this system turns out to be flexible, it doesn't matter because we're working within an environment where the system doesn't require that level of flexibility. (Think: using Newtonian physics for everyday planet-earth situations as opposed to quantum mechanics.) The goal is to mimic, not mirror.

Ignore the drawings - they're crap.]

Leatherbound AI notes p11 (2003)

Leatherbound AI notes p11

Sunday, November 20, 2005

6:52 PM

A cept is the smallest detail unit, a conceptual construct which is an impossibility in reality. This is why we have so much semantic ambiguity. We keep hoping for a true parent node but never find one. Why? Because the neural field is not bounded by concrete borders.

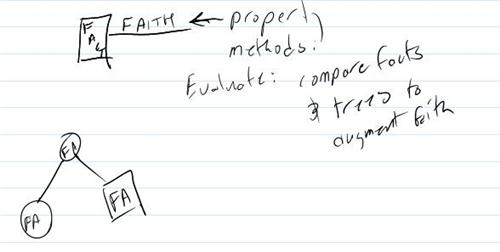

Faith is the act of relying on individual cepts without pursuing the trails further back (causing ambiguity)

[11/20/2005 Wow. This is a can of worms. First, a cept is a construct that houses a single idea. What I meant by "an impossibility in reality" is that this is not going to be the organic solution; this is a simple representation of a more complex structure. I believe semantic ambiguity will be inherent in the system as we use words to define words. The rest of that sentence is probably correct…

I do have to change my definition of Faith per my journal entry early this morning. Faith isn't the act of failing to follow a definition back to discover the untruths, it has to do with a rather radical, complicated cept - believing in that for which there is no proof. This cept changes the way we process information. "I know what I see, and what you say makes sense, but I can't believe it because God is the answer and his answer trumps all." It is a true mental weighting system at work. This particular cept has been given authority to always be higher in truth than all other facts. Fascinating]

Leatherbound AI notes p10 (2003)

Leatherbound AI notes p10

Sunday, November 20, 2005

6:50 PM

[11/20/05 Actually I think this definition holds up! See previous notes]

Leatherbound AI notes p9 (2003)

Leatherbound AI notes p9

Sunday, November 20, 2005

6:12 PM

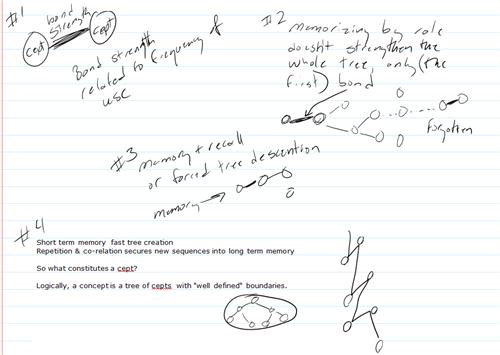

[11/20/2005 Well the first figure still holds: bond strength between concepts (which governs retention and recall) is stronger if the path is traversed frequently. This bond still fades over time, but should bear the marks of having high bond strength (perhaps a max?)

#2 is almost completely right. Memorizing by rote simply runs over paths in short term memory which don't stick. From experience you will often recall the first fact and the last one but without any strong relational thread, you'll lose all the stuff in the middle. There has to be some way of figuring in interest level… I wonder how to represent interest. I think it almost has to be an artifact of the system. Perhaps interest comes in when lots of facts make sense? I don't know.

#3 is a weak statement that isn't worth much… Recall is just the act of retrieving information. I'm not sure what I meant by 'forced'.

#4 I'm not sure what I meant by fast tree creation - perhaps I was trying to illustrate the disposability of the short term memory tree. I think relation to existing cepts helps move things into long-term memory, then repetition over time. I have defined cepts earlier and I believe those definitions supersede the one above.]
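The first figure's idea (a bond that strengthens with each traversal, fades over time, and remembers how strong it once was) could be sketched roughly as below. The `Bond` class, the additive boost, the exponential fade, and the numbers are all assumptions chosen for illustration; the notes do not specify any of them.

```python
from dataclasses import dataclass


@dataclass
class Bond:
    """A link between two cepts; names and rates here are hypothetical."""
    strength: float = 0.0  # current ease of recall, 0..1
    peak: float = 0.0      # highest strength ever reached ("perhaps a max?")

    def traverse(self, boost: float = 0.2) -> None:
        # Each time the path is walked, the bond gets stronger, capped at 1.
        self.strength = min(1.0, self.strength + boost)
        self.peak = max(self.peak, self.strength)

    def fade(self, ticks: int, decay: float = 0.9) -> None:
        # Without use, strength shrinks a little every tick; the peak is kept.
        self.strength *= decay ** ticks


bond = Bond()
for _ in range(3):
    bond.traverse()
bond.fade(ticks=2)
```

How the stored `peak` should influence later recall (faster relearning, a floor on strength, or something else) is left open here, as it is in the notes.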

Leatherbound AI notes p8 (2003)

Leatherbound AI notes p8

Sunday, November 20, 2005

5:24 PM

Sunday 2/2/03

I'm letting too much time slip by without developing my AI project. Maybe now that work will be more regimented, I will be able to devote more time to this:

In the broader scheme you can see evidence of this in the way we write, organize, compute, drive… everything we do (page) is an extrapolation of our thought process. So why then is it so hard for the system to examine itself?

[11/20/2005 HA! The first paragraph was written when I was first told about having to work only 8 hours and report that in Time & Labor. I would soon be promoted to management and quickly overwhelmed by work once again.

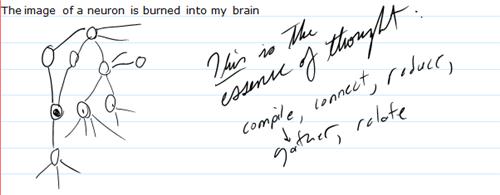

The image of a neuron is useful in one major way: it shows the relational lines between cepts. As for the essence of thought, I'm not so sure my list covers the bases adequately. This may be a description of one of the background processes for storing cepts, but since I don't elaborate on any of the one word bullets, it is hard to be sure.

Why is it so hard for the system to examine itself, indeed. A friend of mine introduced me to the idea that "You can't read the label from inside the bottle." That's a fantastic analogy.]

Leatherbound AI notes p7 (2003)

Leatherbound AI notes p7

Sunday, November 20, 2005

5:17 PM

Language & Interpretation

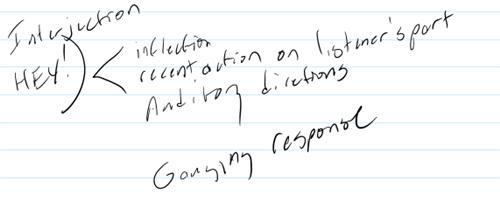

[11/20/2005 Wow. Another vague entry. I think I was trying to divine the understanding of interjections but I sure didn't write much.

I just imagined a dialogue where the word HEY! is explained.

Heh - I even thought about explaining that ALL CAPS IS SHOUTING SO AMP UP THE IMPORTANCE, then figuring out how to teach AI to spot people who cry wolf with caps. That would be a lot of fun to explore. For another time, perhaps.]

Leatherbound AI notes p6 (2003)

Leatherbound AI notes p6

Sunday, November 20, 2005

5:07 PM

1/22/03

Perhaps an object model of thought would provide some insight.

What is the core object? Fact? Word? A Truth? I think I'm using a fact weighted by faith in that fact.

[11/20/2005 That's a good question. I still don't have an answer. I know at some point whatever structure is used should be capable of containing a phrase which constitutes a single idea. Perhaps "Idea" is the root object. (It's good that we don't have to mess with sensory information!) I'm only modeling thought and communication.

The drawing above is pretty useless… doesn't say much. The comment about comparing facts to augment faith is also useless. I wonder if I should introduce a drive to find similarities that will surpass the desire to be factual. (modeling the behavior of astronomers who are looking so hard for another planet with life that they think they see life everywhere … or the religious devout who see/feel/hear God.)

cept = idea = concept. A concept can be as simple as a letter or as complex as a phrase. If it is a sentence it should be short and must embody a single modular concept.

So what defines the modularity of a concept? If parts of it can be stripped away and applied to another cept in some analogous way, then the stripped version is stored as a cept.]

Leatherbound AI notes p5 (2003)

Leatherbound AI notes p5

Sunday, November 20, 2005

4:52 PM

Importance fades with disuse

Relevance

Also fades with disuse

Low relevance items fall off the tree first as memory runs low

Knowledge Storage and Retrieval

Language & language usage is a reflection of how the brain stores and organizes information

[11/20/2005 I agree with the edit up there, importance fades with disuse. Importance of information will be weighed by three factors: intensity(?), time and repetition. For example, I don't ever touch a lit stove because I know that I can be seriously hurt. This is an immutable fact, but if I were whisked away for 30 years to a planet which had no fire, I could see this information fading away. On my return I might remember something about a stove being hot and dangerous so I'd avoid it but the fear wouldn't be as intense. That was probably a poor example. A better one would be: You have to carry exact change for the bus because they don't make change. If you stop riding busses and enough time passes, you may forget about that completely.

An interesting secondary note - This is the sort of memory that can be revived when the idea is reintroduced. (e.g. you take the bus again for the first time in years and say "OH YEAH! I forgot!") You remember what busses are for, how they work, etc. but forgot a detail.

I don't know if I agree with the line about language being a reflection of how the brain stores and organizes information. I think it is a clue to how the brain stores and organizes information, but not a complete reflection. Also in the car today I thought about the smallest unit of thought (in fact I was up at 3am this morning searching the internet for this topic… no luck). I'm still not solid on how I want to approach the storage of information apart from a barely formed notion that I need to have near infinite relational links to 'cepts'.]
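The three factors named above (intensity, time and repetition) could be combined in many ways. The sketch below is just one guess, assuming a half-life style fade and a repetition term that saturates; the function name, the formula, and the default half-life are hypothetical.

```python
def importance(intensity: float, repetitions: int, years_idle: float,
               half_life_years: float = 10.0) -> float:
    """Combine intensity, repetition and elapsed time into one 0..1 score."""
    # Repetition helps a lot at first, then levels off toward 1.
    reinforcement = 1.0 - 0.5 ** repetitions
    # Disuse halves the score every `half_life_years` (an assumed constant).
    fade = 0.5 ** (years_idle / half_life_years)
    return intensity * reinforcement * fade


# The hot stove: intense and well rehearsed, before and after 30 idle years.
stove_now = importance(intensity=0.9, repetitions=5, years_idle=0)
stove_after_30 = importance(intensity=0.9, repetitions=5, years_idle=30)

# Exact change for the bus: low intensity, so it falls away sooner.
bus_after_30 = importance(intensity=0.2, repetitions=5, years_idle=30)
```

Revival on reintroduction (the "OH YEAH! I forgot!" moment) would amount to resetting `years_idle` to zero while keeping the old repetition count.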

Leatherbound AI notes p4 (2003)

Leatherbound AI notes p4

Sunday, November 20, 2005

4:37 PM

V "Everyone says that you lie to me"

Introduces Doubt

B "Do they? Do I give you a lot of contradictory information?"

V "No"

Doubt Reduced… (a little)

Importance

Frequency of use

Very important - as told by several people w/ high believability scores

Expertise

Increases believability in a particular tree. If a user gives a lot of non-contradictory info under a particular node, their expertise level goes up.

[11/20/2005 I'm amazed by how much went unsaid here. There is enough to trigger the right memories, though. The exchange above highlights the attribute by which no truth is unshakable. AI questions the believability of his master. I'm imagining all sorts of scenarios going on in the real world. For example, I release this AI brain and it skyrockets in popularity. Mob mentality can kick in and people could gang up to tell the AI brain that I lie. (This isn't paranoia, just fascinating fuel for consideration - I'd welcome that challenge)

The importance section is a carry-over from the core attribute that memories and facts solidify with repetition. I was talking about this with Amy in the car today when I realized that repetition in the immediate memory doesn't make a lasting impression because only the summary will make it to the long term storage. Long term storage will be invigorated by review later on down the road. I imagine a clock ticking away on short term memory which lasts just long enough to allow context but not long enough to take up valuable system resources. I wonder if I can use computer memory as short term memory and hard drive as long term. That would be interesting. The hard drive only stores summaries and analogies…. That is a story for another time.

Another idea triggered by the importance section has to do with weighting. Since AI won't have any life-threatening hazards to contend with (it will work through positive reinforcement), I have to find some rule for highlighting the importance of certain concepts. "Don't tell anyone my phone number," for example, could be bypassed if the computer could be bamboozled easily. A user could say "Oh, Brien told me to tell you to give it to me."

Expertise should probably be a side-effect of learning and interaction rather than a weighted attribute. If someone gives a lot of good discussion that makes sense on a particular branch of "cepts", they could be considered an expert. The concept of 'expert' can simply be defined as a cept with AI.]

Leatherbound AI notes p3 (2003)

Leatherbound AI notes p3

Sunday, November 20, 2005

4:30 PM

TRUTH & Believability

Users all have a core level of believability. People whose information is usually contradictory (self or w/group or w/well established) wrong or inadequate = low belief score

People who confirm facts with low truth scores increase truth and their own believability.

People who confirm well established truths do not gain much believability nor does their input increase the fact's truth as much as it would a lesser known truth.

[11/20/2005 Once again I see my brain outpacing my ability to write. The second paragraph means: People who are able to provide adequate supporting evidence to bolster their low-scoring fact will impress the AI engine, increasing their likeability and believability. This is the equivalent to convincing someone that using "you and I" in the predicate is incorrect by showing the rule to them from a grammar book.

The scenario handled by the third paragraph is the one in which someone bores you by telling you things you already know.]
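The three believability rules above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 0-to-1 score ranges, the `rate` parameter, and the names `Fact`, `User` and `confirm` are invented here and do not come from the notebook.

```python
from dataclasses import dataclass


@dataclass
class Fact:
    text: str
    truth: float = 0.5  # assumed scale: 0.0 = disbelieved, 1.0 = well established


@dataclass
class User:
    name: str
    believability: float = 0.5  # assumed core level every user starts with


def confirm(user: User, fact: Fact, rate: float = 0.2) -> None:
    """A user confirms a fact. Both scores rise, but less for well-known facts."""
    # Headroom is large for a low-scoring fact and tiny for an established truth.
    headroom = 1.0 - fact.truth
    # The fact gains truth in proportion to who is confirming it.
    fact.truth = min(1.0, fact.truth + rate * user.believability * headroom)
    # The user gains believability only to the extent the fact needed support.
    user.believability = min(
        1.0, user.believability + rate * headroom * (1.0 - user.believability)
    )
```

Confirming a fact with a truth score of 0.2 moves both scores noticeably, while confirming one at 0.95 barely moves either, which is the "telling you things you already know" case.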

Leatherbound AI notes p2 (2003)

Leatherbound AI notes p2

Sunday, November 20, 2005

4:29 PM

Dialogue:

Brien: "A ball is round." (a statement of assignment)

VGER: Ball tans round (Brien's truth weight is given to the assignment)

No truth is unshakable. The evaluation system needs weights that can shift easily.

[11/20/2005 I actually crossed that last part out in my notes, but it is true. I think my 'truth weight' represents the level of trust the AI engine has for me. (I'm also a little embarrassed by "VGER" up there, but I've been trying to find a good name for my AI brain for years and have so far come up empty.)]

Leatherbound AI notes p1 (2003)

Leatherbound AI notes p1

Sunday, November 20, 2005

4:18 PM

This is a collection of my AI notes as scribbled in a nice leatherbound book with one of the nicest pens I have ever owned. I'm not sure what became of the pen or the leather book enclosure, but as of November 2005, I have the actual book (one of them anyway… weren't there more?)

This is a faithful transcription of the notes and drawings in my book (no matter how ludicrous I find them now.)

January 05, 2003

Pinker "How the Mind Works"

p.14 "An intelligent system, then, cannot be stuffed with trillions of facts. It must be equipped with a smaller list of core truths and a set of rules to deduce their implications."

No:

This is the way to build today's machines. A truly intelligent system will be a machine which evaluates truth. The first truly cognizant machines will best be employed as judges - this is something even sci-fi writers have missed.

To be a thinking machine is to evaluate levels of truth

[11/20/2005 Pinker is right, and I am actually agreeing with him. In fact, I am kind of laughing at how immature and excited I sound in this passage - it was only 3 years ago. The idea of computer judges may be on track, but this assumes that AI will have reached a sufficient level of understanding. I believe I was hinting at the idea that AI would parse words and meaning without human bias.]

Leatherbound notebook

I just came across a 2005 transcription of some handwritten notes that I made in 2003. They are interesting in that they are my initial thoughts, followed by commentary a few years later. I will post each page separately.

Tuesday, January 25, 2011

Limitations...

Over the years, I have made lots of paper simulations of computer learning through fictional human/computer dialogs. I think I did a good job of imitating computer ignorance, but I stopped short of any real breakthroughs in mechanism. To borrow a metaphor, I am like an alien observing cars from space. I can do a good job of imitating their look and behavior, but I don't have any knowledge of fuel, combustion engines or the people driving them.

The hardest part in building this conversation engine is coming up with simple quantum rules that when assembled, resemble human learning and reason. It is hard because you not only need all the rules, you need to get them precisely right.

I think over the course of the few blog entries that I've made, I have discovered that many of my breakthroughs came in the form of 'acceptable ways the engine can fail'. As humans, we have unrealistic expectations about what a 'smart' machine can say. My conversation engine can be forgiven a wide array of common sense lapses because it is starting its existence without senses.

I imagine a time after I have created a presentable engine in which I talk to the press about this thinking machine... The scenario that comes to mind immediately is a horrible segment on a morning talk show with Todd Wilbur. Todd wrote a book called "Top Secret Restaurant Recipes" in which he divulges restaurant recipes. The talk show set up a blind taste test panel to see if they could tell the difference between his recipes and the real thing. The specific example I remember was a big-mac-alike. The panelist could easily tell the real thing from the home made version. Every one of his dishes failed because the test was inappropriate. His book was about coming as close as you can at home, not about producing food that mirrors the production processes of a fast food chain. After the big mac taste test, the panelist actually said the home-made version tasted better, but was drowned out by the jocular scorn of the hosts: "Whoops! Blew that one - better go back and change your recipe."

I see this happening with the public release of the conversation engine. Techies will get it and make a buzz. The world will hear: "some programmer made a computer think like a human" and will set it up for failure because of poorly managed expectations. My attempt to halt this freight train would go something like this:

Imagine you were born without the ability to see or hear - like Helen Keller. Now imagine that you also cannot smell, taste or touch. As humans with these senses, it sounds like a miserable, confining experience, but if you are born without these senses you won't mourn their loss; you simply "are" without them. Helen Keller was not miserable without vision or hearing - in fact, her most famous quotes are profoundly optimistic.

Now, how would you "be" without these senses? What would you do? It is hard to imagine because you have no input from the outside world. You would have some basic pre-wired rules, but no sensory input and no language. The conversation engine has one sense - text. With text, the conversation engine can read, speak and learn.

You will find that it can use metaphors like "bright" for a smart person or "I see what you mean" for "I understand", but doesn't truly comprehend the relationship because it lacks vision input. You will find blind people do the same thing. While a world of blind people would not conceive of the word bright, much less use it in a descriptive metaphor, our human tendency to label and simplify makes it possible to convey meaning using these words regardless of our ability to comprehend the word's origin.

When talking with the conversation engine, you will be struck by how profoundly 'not human' it is. I hope you will also notice how eerily human it is occasionally. This is a starting point. It will be up to others to add human senses to the equation. Once they do, who knows? Maybe we will finally have a tool for understanding ourselves.

Monday, January 24, 2011

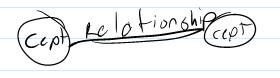

An Introduction to "Cepts"

Before I had heard the term 'meme', I came up with the idea of 'cepts' - an abbreviation of 'concept'. A meme is a 'cultural unit', a bit of common wisdom, a tale, a slogan... anything that has inserted itself into mass common sense. At first, I thought the accepted 'meme' replaced my invented 'cept' entirely, but it does not. While the two ideas are related, cepts are much more granular.

A cept is a nugget of thought. Our brains are fantastic summarizing machines. We take complex ideas and give them simple labels in order to piece them together with other ideas and summarize them further. When we don't have a single word for a cept, we use a phrase.

The identification, labeling and cataloging of cepts is an important part of the conversation engine.

Cepts are single words or phrases that encapsulate a thought.

"Apple" is a cept, but so is "isn't that special?"

The following are single cepts:

"That's a good point"

"If you want"

"not a problem"

"thank you"

"no, thank you"

"how did you do that?"

"did you do that"

"what are you doing?"

Cepts are chunks of sentences that we assemble like tinkertoys to communicate.

The sentence "Where is the hotel?"

Has at least two cepts.

"Where is" and "the hotel"

I say 'at least two' because there may be an overlapping cept "where is the" and a single word cept "hotel"

Words used in cepts can be used in other cepts.

"Where is the" and "Where's the" are two cepts that represent the same thing: An interrogative begging a response with location cepts, lack of knowledge cepts or assistance to gain this information cepts.

Interesting point -- cepts are used to establish context and narrow the scope of conversation.
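A rough sketch of how overlapping cepts might be spotted in a sentence. The tiny lexicon, the meaning labels such as `ask-location`, and the function name `find_cepts` are all made up for illustration; a real engine would presumably learn its cepts rather than have them typed in.

```python
import re

# Hypothetical starter lexicon: cept phrase -> label for the thought it carries.
CEPTS = {
    "where is": "ask-location",
    "where is the": "ask-location",
    "where's the": "ask-location",
    "the hotel": "hotel",
    "hotel": "hotel",
}


def find_cepts(sentence: str, lexicon: dict[str, str] = CEPTS) -> list[tuple[str, str]]:
    """Return every known cept in the sentence, including overlapping ones."""
    words = re.findall(r"[a-z']+", sentence.lower())
    found = []
    # Try every contiguous run of words, shortest first from each start position.
    for start in range(len(words)):
        for end in range(start + 1, len(words) + 1):
            phrase = " ".join(words[start:end])
            if phrase in lexicon:
                found.append((phrase, lexicon[phrase]))
    return found
```

For "Where is the hotel?" this finds "where is", "where is the", "the hotel" and "hotel". "Where's the hotel?" yields different phrases but the same two labels, which is one way to treat the two wordings as the same cept.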

Email notes... January 17, 2011

This is an email that I sent to myself. It is just free-flowing thoughts on my discussion engine idea.

Truth. Believability. Interest. Trust. Likeability. Enjoyment.

People who are truthful give believable information.

Information with no supporting info is believed but suspect. (gullibility)

Information from different entities that agrees, + truth +trust

Information from different entities that disagrees 1) more trustworthy ent is more believable. 2) subject believability decreased in proportion w trust

All entities ascribed trust level. Truthful info =trust+

interest = ? Subjects with most truthful info that have unanswered questions.

Interest fades with time since last 'considered'

Time fades trust and distrust along logarithmic curve.

Astonishment is proposal or truth of likely believability that is novel or unexpected.

People of high trust are enjoyed.

Funny = zig zag + novelty

novelty = interest+

Factors affecting interest(topic): knowledge

Knowledge=accumulated truths, questions

"makes sense" = confirmed truths by trustworthy sources

Truth(topic) is increased proportionally by the trustworthiness of the source

Trust(entity) is increased by creator++++, proportionally by number of truths given or truths confirmed (trust of ent1 increases if ent2 independently confirms it.)

If ent1 and ent2 are friends, truthfulness of confirmed information not as strong as unrelated people.

"makes sense" when info follows classical logical rules but can be gamed when trustworthy people confirm illogical ideas. This rule (trust by community confirmation) makes it possible for AI to believe in god. Depends on weighting... Can logic trump trust? If so, AI will disagree with unproven beliefs of creator.

Need. AI needs creator or community? Initially, creator trumps all, but at maturity, AI can weight creator trust(!) following human puberty model. Opens possibility for AI to be gamed away from trusting creator. (Cool.)

Proof = logical support of questionable info with truth

Ultimate truth = info accepted on blind faith. These are anchors.

Logic can trump blind faith. When happens, can radically alter personality of AI. Can shatter complex trust architectures.
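Two of the rules above, trust-weighted agreement and trust fading over time, can be sketched as follows. The score ranges, the `rate` constant, the exact logarithmic formula and the function names `faded` and `belief` are assumptions chosen for illustration; the notes only say that trust fades "along a logarithmic curve" and that the more trustworthy entity is more believable.

```python
import math


def faded(score: float, days_idle: float, rate: float = 0.1) -> float:
    """Pull a trust score in [-1, 1] toward neutral (0) as idle time grows.

    Positive scores are trust and negative scores are distrust; both fade.
    The fade is logarithmic: fast at first, then ever more slowly.
    """
    factor = max(0.0, 1.0 - rate * math.log1p(days_idle))
    return score * factor


def belief(claims: list[tuple[float, bool]]) -> float:
    """Combine (source_trust, says_true) claims into a belief in [-1, 1].

    Each source pulls in its own direction with a force equal to its trust,
    so the more trusted side wins a disagreement, but by a reduced margin.
    """
    total = sum(trust for trust, _ in claims)
    if total == 0:
        return 0.0
    signed = sum(trust if says_true else -trust for trust, says_true in claims)
    return signed / total
```

With one source at trust 0.9 saying a fact is true and another at 0.3 saying it is false, `belief` comes out near 0.5: still believed, but less firmly than if the two had agreed.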

All of these underpinnings are great for an AI engine that already knows how to communicate, but I want it to build from language acquisition up. For AI, communicating is as interesting as moving is to a human. What environmental rules will be conducive to learning? In order to operate in a world where complex attributes like trust and novelty have meaning, the AI must have language... Interrogatives, value assignment, labeling (naming) and judgement lexicons... Probably qualitative and quantitative lex, too. All of these base lexicons can and should grow.

Is

Is not

What does x mean?

Context. Subject + Time

Do I mean Chicago the band or Chicago the city? 1) clues from sentence 2) clues from previous sentences scan relations quickly. If no context, ask. If name exists in lexicon ask for clarification.

Do you mean x or y?
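The Chicago example can be sketched as a lookup that checks the current sentence first, then earlier sentences, and asks when nothing settles it. The clue words, the `SENSES` table and the function name `resolve` are hypothetical; only the order of the checks and the two fallback questions come from the notes.

```python
import re

# Hypothetical lexicon: name -> possible senses, each with a few clue words.
SENSES = {
    "chicago": {
        "Chicago the band": {"album", "song", "concert", "band"},
        "Chicago the city": {"illinois", "flight", "downtown", "city"},
    }
}


def resolve(name: str, sentence: str, history: tuple[str, ...] = ()) -> str:
    """Pick a sense from context, or return the question the engine should ask."""
    senses = SENSES.get(name.lower())
    if not senses:
        return f"What does {name} mean?"
    # 1) clues from this sentence, 2) clues from previous sentences, newest first.
    for text in (sentence, *reversed(history)):
        words = set(re.findall(r"[a-z]+", text.lower()))
        scores = {sense: len(words & clues) for sense, clues in senses.items()}
        best = max(scores.values())
        winners = [sense for sense, score in scores.items() if score == best]
        if best > 0 and len(winners) == 1:
            return winners[0]
    # No usable context: ask for clarification.
    return "Do you mean " + " or ".join(senses) + "?"
```

Calling `resolve("Chicago", "I love Chicago", ("I bought their new album",))` settles on the band from the earlier sentence; with no history it falls back to asking.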

Good!

Bad.

Yay!

Aw.

Yes.

No.

Conversations.

The hardest thing for me to see are pregnant pauses. What sparks AI to talk. It needs a greeting lexicon. And polite engagement and disengagement discourse.

[connect]

Hello!

If no response, as time passes, AI loses interest. If interest falls below a certain threshold, it will "focus" on something else like trying again.

Hello? Are you there?

Sec.

What does "Sec." mean?

Needs punctuation lexicon.

Creator will undoubtedly need shorthand for quick, high trust fact assignment.

Similar. A=B is easy. A~B difficult.

is similar to

Is like

Analogy lexicon?

Big, small, fast, slow. Need comparison lexicon for qualities to make sense. I think this all falls under classical logic rules.

Hello

...

Hello? Are you there?

...

I am closing this connection to save resources
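The unanswered greeting above can be simulated with a small loop in which interest decays during each silent wait. The starting interest, the decay factor, the threshold and the function name `greet_until_bored` are illustrative assumptions; the notes do not give numbers.

```python
from typing import Iterable, Optional


def greet_until_bored(
    replies: Iterable[Optional[str]],
    interest: float = 1.0,
    decay: float = 0.4,
    threshold: float = 0.2,
) -> list[str]:
    """Simulate the greeting exchange; each item in `replies` is one waiting period.

    `None` means the user stayed silent for that period.
    """
    transcript = ["Hello"]
    for reply in replies:
        if reply is not None:
            transcript.append(f"(user) {reply}")
            return transcript
        interest *= decay  # silence costs interest
        if interest < threshold:
            break  # too bored to try again
        transcript.append("Hello? Are you there?")
    # Either interest ran out or there are no more waiting periods to simulate.
    transcript.append("I am closing this connection to save resources")
    return transcript
```

With two silent periods and the default numbers, the transcript matches the exchange above: one greeting, one retry, then the disconnect message.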

(The following is completely wrong, and in many cases exactly backwards - BM 9/6/2011) Irritation. Anger. AI is irritated with info source when large truth trees collapse. Excited when new large truth trees constructed. AI is irritated by wasteful discourse. E.g. Confirmation of truths that are already well supported and medium age. Irritated by junk data. Should disconnect if sufficiently irritated.

Interest. Interest has a bell curve based on time. Recent is interesting. Medium time is not interesting. Long time is more interesting. This is due to novelty. Novelty is high for recent new info, drops off rapidly and leaves altogether after medium time. Long time means it is forgotten and thus novel again.

Recognition. AI recognizes by login, secondarily by IP. IP is treated like the login's community.

Forgetting. To conserve resources, data ages. Any info that is not reaffirmed fades and can be forgotten completely.
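A minimal sketch of forgetting as described above: every fact has a strength that fades unless it is reaffirmed, and anything that fades far enough is dropped. The exponential half-life, the `floor` cutoff and the class name `MemoryStore` are assumptions made for the example, not something the notes specify.

```python
class MemoryStore:
    """Facts fade with age; reaffirming a fact refreshes and strengthens it."""

    def __init__(self, half_life_days: float = 30.0, floor: float = 0.05) -> None:
        self.half_life_days = half_life_days
        self.floor = floor  # below this strength a fact is forgotten
        # fact -> (strength when last affirmed, day it was last affirmed)
        self._items: dict[str, tuple[float, float]] = {}

    def strength(self, fact: str, today: float) -> float:
        """Current strength of a fact, halved for every half-life of disuse."""
        if fact not in self._items:
            return 0.0
        strength, affirmed = self._items[fact]
        return strength * 0.5 ** ((today - affirmed) / self.half_life_days)

    def affirm(self, fact: str, today: float) -> None:
        """Store or reaffirm a fact; repetition adds to whatever strength is left."""
        self._items[fact] = (self.strength(fact, today) + 1.0, today)

    def forget_faded(self, today: float) -> list[str]:
        """Drop facts that have faded below the floor and report what was lost."""
        faded = [fact for fact in self._items if self.strength(fact, today) < self.floor]
        for fact in faded:
            del self._items[fact]
        return faded
```

A fact affirmed once and never revisited is at half strength after 30 days and is gone after a year, while one that keeps being reaffirmed stays well above the floor.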

Environment must define "recent" in quantitative terms. Would be good to have access to human research on context.

Need. AI might not behave irritably (or as irritably) toward creator and truthful people because it needs them. Need for AI is a need for quality information.

It has been a while since we talked!

AI will like you more if you make sense.

Sunday, January 23, 2011

Understanding vs comprehension - Part II

Thinking more on this topic... I mentioned that comprehension is a subset of understanding. I think it is more accurate to say that comprehension requires understanding and experience. Comprehension is about understanding facts and relating them to prior experience in order to forecast goals and motivations. That last bit is tricky.

Every time I start to distil perceptive mechanisms, I try to take a step back to see how this will work in the conversation engine. The goal of the conversation engine is to behave like a human within the confines of text. The engine cannot see, hear, taste, touch or smell. It quite literally is a brain in a box. Its only sense is its method of communication - text.

Optimally, I would like the engine to learn to speak on its own by using the mimed human logic that I am trying to divine here. Unfortunately, I'm not sure that could happen quickly enough to be practical, so I have to establish a base grammar. That base grammar can change over time (it should not be immutable), but to start with there must be words with established meaning. I suppose I don't think language can evolve naturally because without a base grammar, there is no way for the engine to distinguish one word from another.

Hmmm... I take that back. The engine could have base character rules that could lead to word and language understanding, but that will be much more complex than starting with a system that can understand some basic words.

Honestly, these are the easy problems. I am going to have a much harder time figuring out how to quantify words and how to manage understanding and comprehension. My goal is to discover simple rules and build little engines to test those rules. I don't have the first rule yet... Just some interesting properties. To be honest I am having a hard time even putting all of the pieces on the table - to say nothing of putting them together.

Saturday, January 22, 2011

Understanding vs comprehension: mechanical context

Definitions of understanding and comprehension as found on the web are often self-referential or invoke other abstract words like meaning and knowledge. In a human context, I found these definitions unfulfilling; in a machine context, they are not helpful at all.

I am trying to define two states of knowledge acquisition and processing that can be demonstrated by a childhood memory of mine. In 1977, I saw Star Wars in the theater. In the opening scene, a tiny consular ship is being fired on by a Star Destroyer. As a 5-year-old, I understood that the big ship was trying to blow up the little ship. My comprehension of the scene was that the small ship is the good guy and the big scary ship is the bad guy.

Understanding is about "what".

Simple understanding: The big ship is shooting at the little ship. Big ship is the bad guy, little ship is the good guy. Big ship, strong. Little ship, weak. Understanding is about ascribing qualities or assuming qualities based on analogs from previous experience.

Comprehension is about "why".

Simple comprehension: Big ship is chasing the little ship because the big ship wants to blow up the little ship. Complex comprehension: Darth Vader is on the big ship and wants to capture (not destroy) the little ship because someone on board has stolen plans to the Death Star. Deep comprehension: Why would it be necessary to find the plans aboard the ship when the technologically advanced people would be able to replicate and transmit the plans? Comprehension is a subset of understanding that is concerned with motivation and intended goals.

Another example: A bully beats up a boy and takes his lunch money. Another boy who witnessed the event understands that the bully beat up the boy. The witness comprehends that the bully beat the boy up to steal his money.

As understanding grows, so does the potential for comprehension, but the two are not lock step.

I realize that this is my first blog entry, but if you are reading this, you will be keenly aware that you have walked into this movie in the middle.

I will restate this more thoroughly later, but my goal is to develop a simpler, more intuitive, more effective conversation engine. (Think Loebner prize winner but without canned responses.) I am trying to distil the mechanics of thought in order to produce a thinking machine.

more later...
