Leatherbound AI notes p16

Sunday, November 20, 2005

8:02 PM

5/1/2003

I have tried to narrow the focus of my AI project to communication. Should the brain be one that evolves into language? Letters are innate knowledge; words and rules are learned. So what rules govern letters?

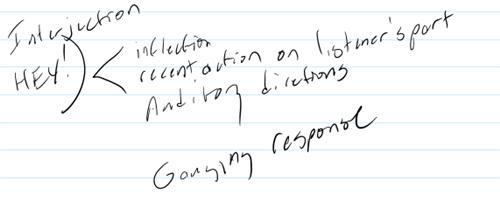

All the AI knows is that a string of characters is communication. A limited character set will help limit the scope of communication, in much the same way that our larynx and mouth can pronounce only a finite range of sounds and our ears hear only a limited spectrum.
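A minimal sketch of the limited-character idea, assuming the AI's entire "vocal range" is a fixed alphabet of lowercase letters, the space, and a few punctuation marks. The alphabet and the names `hear` and `is_communication` are hypothetical illustrations, not taken from these notes. Anything outside the alphabet is simply not perceived, the way ears miss sounds outside their spectrum.

```python
import string

# Hypothetical fixed alphabet: the only "sounds" the AI can hear or produce.
ALPHABET = frozenset(string.ascii_lowercase + " .,?!")


def hear(raw: str) -> str:
    """Return the part of the input the AI can perceive.

    The input is lowercased, then every character outside ALPHABET is
    dropped, much as ears ignore frequencies outside their range.
    """
    return "".join(ch for ch in raw.lower() if ch in ALPHABET)


def is_communication(raw: str) -> bool:
    """Per the notes, any non-empty string of perceivable characters counts."""
    return len(hear(raw)) > 0


if __name__ == "__main__":
    print(hear("Hello, World!"))      # hello, world!
    print(is_communication("1234"))   # False: nothing in the alphabet
```

Shrinking or growing `ALPHABET` is the knob that bounds what the AI can ever say or hear.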

A computer must know you are there in order to communicate.

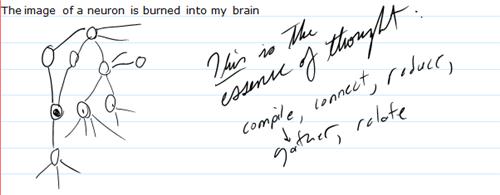

What do we do when we think?

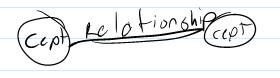

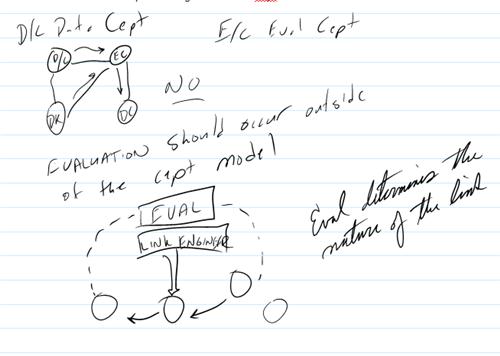

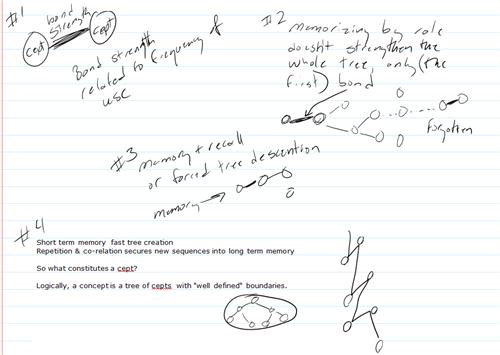

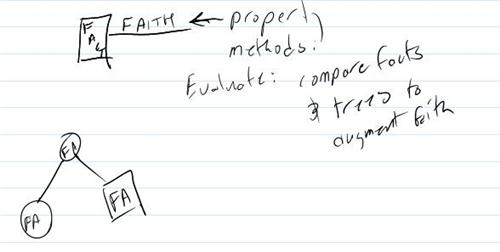

Problem solving: traverse cept trees; collate, compare, contrast, condense.

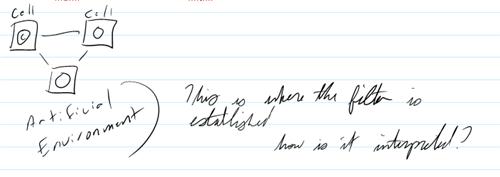

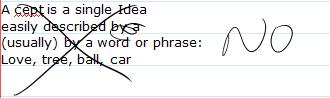

[11/20/2005: After reading over the last few pages, I feel like screaming at my 2003 self. Good lord. Get out of these minutiae. Letters are just cepts used to describe words. Should AI evolve into language? Before I am too hard on myself, I have to explain where I was going at the time. I was imagining a system that could be taught much like an infant…

In this romantic vision, the program would see words on the screen and imitate them back. It would study the back-and-forth relationship between words out and words in to learn to speak. This system would need an administrative layer used to monitor and teach the AI's brain while it worked. Actually, this tool will be necessary anyway… But I think the system can start as an essentially blank slate, pre-programmed only with knowledge that will serve as learning tools. It will understand statements of assignment when they are phrased a certain way.]
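A minimal sketch of what "understanding statements of assignment when phrased a certain way" might look like, assuming the fixed phrasing is `<word> is <meaning>`. The pattern, the `Brain` class, and its `hear`/`recall` methods are hypothetical illustrations, not a design recorded in these notes. Input that does not fit the phrasing goes to an `admin_log`, standing in for the administrative layer a teacher would use to monitor the brain.

```python
import re
from typing import Dict, List, Optional

# Hypothetical fixed phrasing for an assignment: "<word> is <meaning>".
ASSIGNMENT = re.compile(r"^\s*([a-z]+)\s+is\s+(.+?)\s*[.!]?\s*$", re.IGNORECASE)


class Brain:
    """A near-blank slate whose only built-in learning tool is assignment."""

    def __init__(self) -> None:
        self.cepts: Dict[str, str] = {}   # learned word -> meaning
        self.admin_log: List[str] = []    # statements for the teacher to review

    def hear(self, statement: str) -> str:
        match = ASSIGNMENT.match(statement)
        if match:
            word, meaning = match.group(1).lower(), match.group(2)
            self.cepts[word] = meaning
            return f"learned {word}"
        # Not phrased as an assignment: record it for the administrative layer.
        self.admin_log.append(statement)
        return "?"

    def recall(self, word: str) -> Optional[str]:
        """Return the learned meaning of a word, or None if never taught."""
        return self.cepts.get(word.lower())


if __name__ == "__main__":
    brain = Brain()
    print(brain.hear("Dog is an animal."))  # learned dog
    print(brain.recall("dog"))              # an animal
    print(brain.hear("hello there"))        # ?
```

The pattern is deliberately naive: a question such as "What is it" would also be read as an assignment, which is exactly the kind of mistake the administrative layer would exist to catch and correct.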